Hello! I'm Alexa, a Research Scientist at Adobe Research.

My research interests are primarily in the fields of Human-Computer Interaction & Accessibility. I investigate how multimodal perception can improve the way we understand and interact with information.

The results of my work have led to novel haptic interfaces that aim to make spatial information more accessible for people who are blind. Application areas of interest include supporting design, collaboration, information visualization and VR/AR.

Prior to Adobe, I was part of the Shape Lab and the HCI Group at Stanford University, advised by Prof. Sean Follmer. Before that, I completed my M.S. in Mechanical Engineering, also at Stanford, and my B.S. in Biomedical Engineering with a minor in Computer Science at Georgia Tech.

If you are a PhD or undergraduate student looking for summer internship opportunities or collaborations, send me an email describing your interests.

Contact:

asiu@adobe.com

Follow @alexafay

Google Scholar

Latest Research

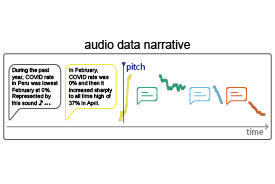

Supporting Accessible Data Visualization Through Audio Data Narratives

To address the need for accessible data representations on the web that provide direct, multimodal, and up-to-date access to live data visualizations, we investigate audio data narratives, which combine textual descriptions with sonification (the mapping of data to non-speech sounds). We present a dynamic programming approach to generating data narratives that accounts for perceptual strengths and draws on insights from an iterative co-design process. Data narratives support users in gaining significantly more insights from the data.
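To make the sonification half of this idea concrete, here is a minimal sketch of one common way to map data values to non-speech sound: each value becomes a pitch. It does not show the dynamic programming approach used to compose the narratives. The log-scaled mapping and the 220 to 880 Hz range are assumptions chosen for illustration, and `value_to_frequency` and `sonify` are hypothetical helper names.

```python
def value_to_frequency(value, vmin, vmax, f_low=220.0, f_high=880.0):
    """Map a data value to a pitch in Hz between f_low and f_high.

    Assumption: interpolate in log-frequency space, so equal steps in the
    data sound like roughly equal musical intervals.
    """
    if vmax == vmin:
        return f_low  # flat series: every value gets the lowest pitch
    t = (value - vmin) / (vmax - vmin)
    t = min(max(t, 0.0), 1.0)  # clamp values outside the data range
    return f_low * (f_high / f_low) ** t


def sonify(series, f_low=220.0, f_high=880.0):
    """Return one pitch (Hz) per data point, scaled to the series' own range."""
    vmin, vmax = min(series), max(series)
    return [value_to_frequency(v, vmin, vmax, f_low, f_high) for v in series]


if __name__ == "__main__":
    # A rising series sweeps two octaves: 220 Hz -> 440 Hz -> 880 Hz.
    print(sonify([0, 5, 10]))
```

A log mapping is used here because pitch perception is roughly logarithmic in frequency; a linear mapping would compress the differences between high values.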

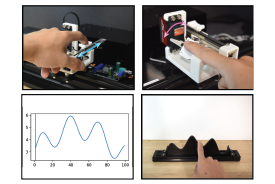

Slide-Tone and Tilt-Tone: 1-DOF Haptic Techniques for Conveying Shape Characteristics of Graphs to Blind Users

To improve interactive access to data visualizations, we introduce two refreshable, 1-DOF audio-haptic interfaces based on haptic cues fundamental to object shape perception. These devices provide finger position, fingerpad contact inclination, and sonification cues. Our research offers insight into the benefits, limitations, and considerations for adopting these haptic cues into a data visualization context.
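As a rough illustration of how a line graph could be turned into the two shape cues named above, the sketch below computes, for a given horizontal finger position, a slider height proportional to the graph's value (a finger-position cue) and a tilt angle that follows the local slope (a fingerpad-inclination cue). This is a hypothetical mapping, not the devices' actual control code; the function name `shape_cues`, the 50 mm travel range, and the 45° tilt limit are all assumptions.

```python
import bisect
import math


def shape_cues(xs, ys, x, travel_mm=50.0, max_tilt_deg=45.0):
    """Return (slider_position_mm, tilt_deg) for a finger at horizontal position x.

    Hypothetical mapping for a piecewise-linear graph with strictly
    increasing xs: the slider height is proportional to the interpolated
    y-value, and the tilt follows the local slope in normalized units.
    """
    if len(xs) < 2 or len(xs) != len(ys):
        raise ValueError("need at least two matching x/y points")
    x = min(max(x, xs[0]), xs[-1])  # keep the finger within the graph
    # Index of the segment [xs[i], xs[i + 1]] that contains x.
    i = min(bisect.bisect_right(xs, x) - 1, len(xs) - 2)
    x0, x1, y0, y1 = xs[i], xs[i + 1], ys[i], ys[i + 1]
    y = y0 + (x - x0) / (x1 - x0) * (y1 - y0)  # linear interpolation

    ymin, ymax = min(ys), max(ys)
    yrange = (ymax - ymin) or 1.0  # avoid dividing by zero on flat data
    xrange = xs[-1] - xs[0]

    # Finger-position cue: height along the slider's travel.
    position_mm = travel_mm * (y - ymin) / yrange
    # Inclination cue: slope of the normalized graph, as an angle.
    slope = ((y1 - y0) / yrange) / ((x1 - x0) / xrange)
    tilt = math.degrees(math.atan(slope))
    tilt = max(-max_tilt_deg, min(max_tilt_deg, tilt))
    return position_mm, tilt


if __name__ == "__main__":
    # Finger halfway along a straight rising line.
    print(shape_cues([0, 1, 2, 3, 4], [0, 1, 2, 3, 4], 2))
```

Normalizing both axes keeps the tilt cue comparable across graphs with very different units; clamping reflects the assumption that a physical tilting surface has a limited range.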

COVID-19 Highlights the Issues Facing Blind and Visually Impaired People in Accessing Data on the Web

Dissemination of data on the web has been vital in shaping the public’s response to the COVID-19 pandemic. We hypothesized that the increased prominence of data might have widened the accessibility gap for the Blind and Visually Impaired (BVI) community and exposed new inequities. Based on a survey (n=127) and a contextual inquiry (n=12), we present observations on the impact that access, or lack of access, to data has on the BVI community, along with implications for improving the technologies and modalities used to disseminate data-driven information on the web.

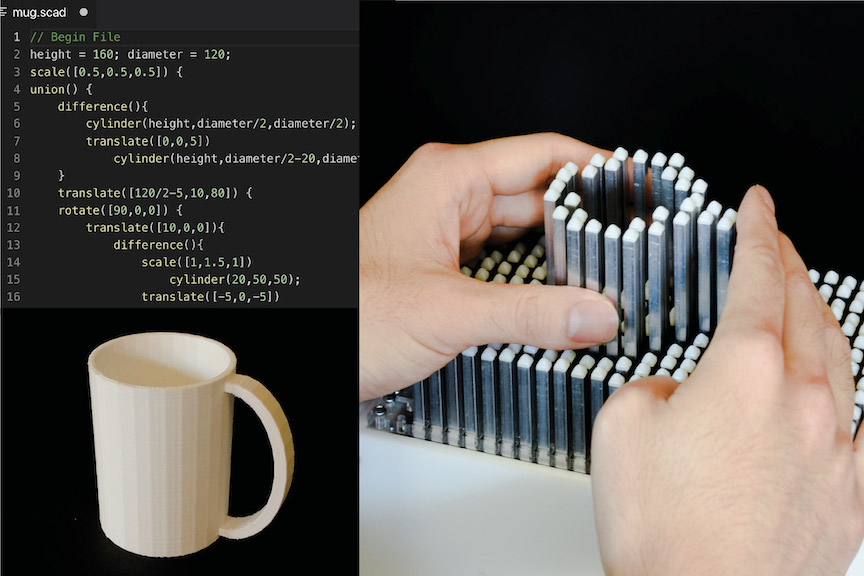

shapeCAD: An Accessible 3D Modelling Workflow for the Blind and Visually-Impaired Via 2.5D Shape Displays

We describe our participatory design process toward an accessible 3D modelling workflow for the blind and visually impaired. We discuss interactions that enable blind users to design, program, and create using a 2.5D interactive shape display.

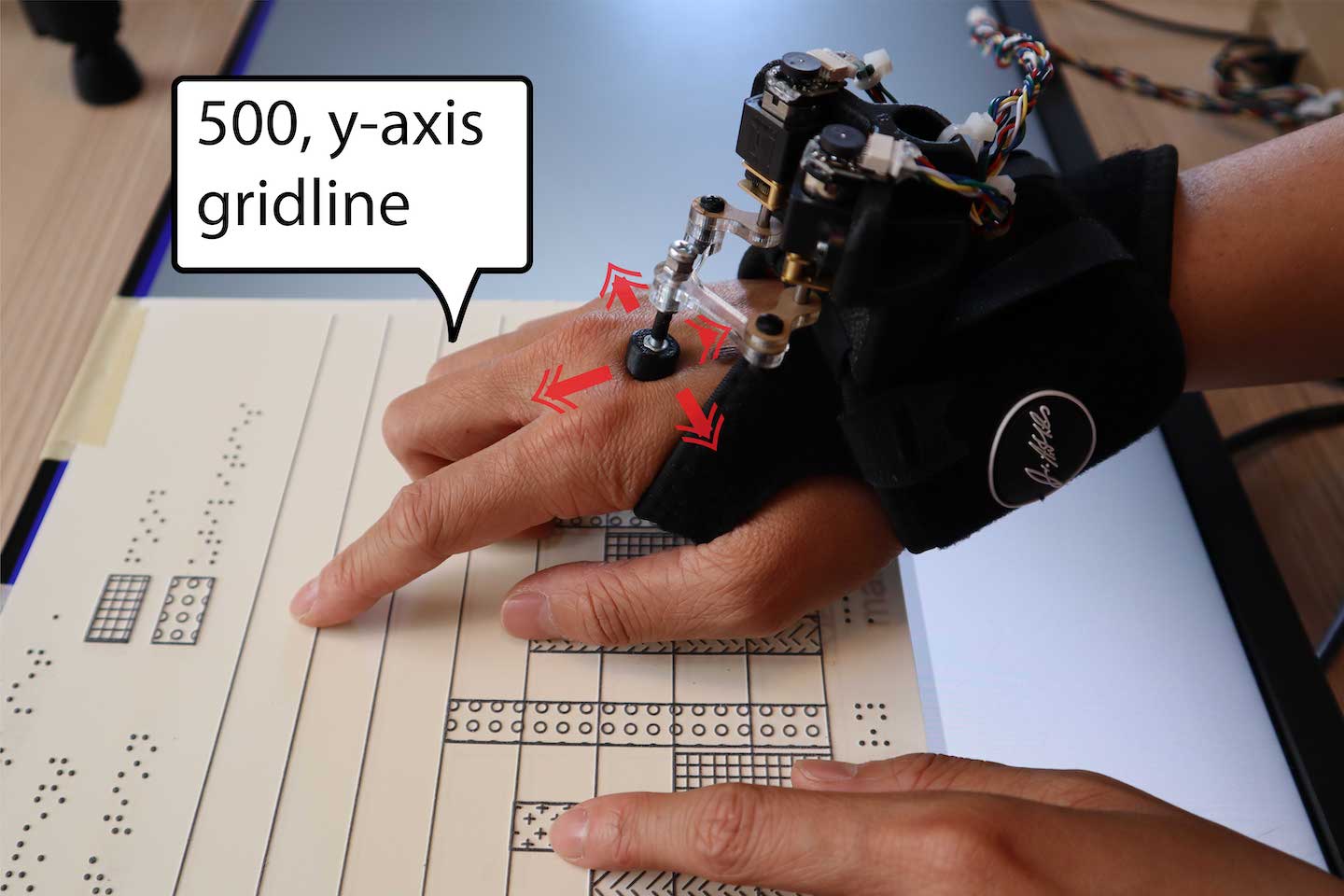

PantoGuide: A Haptic and Audio Guidance System To Support Tactile Graphics Exploration

Tactile graphics interpretation is an essential part of building tactile literacy and often requires individualized in-person instruction. PantoGuide is a low-cost system that provides audio and haptic guidance cues while a user explores a tactile graphic. We envision scenarios where PantoGuide can enable students to learn remotely or review class content asynchronously.

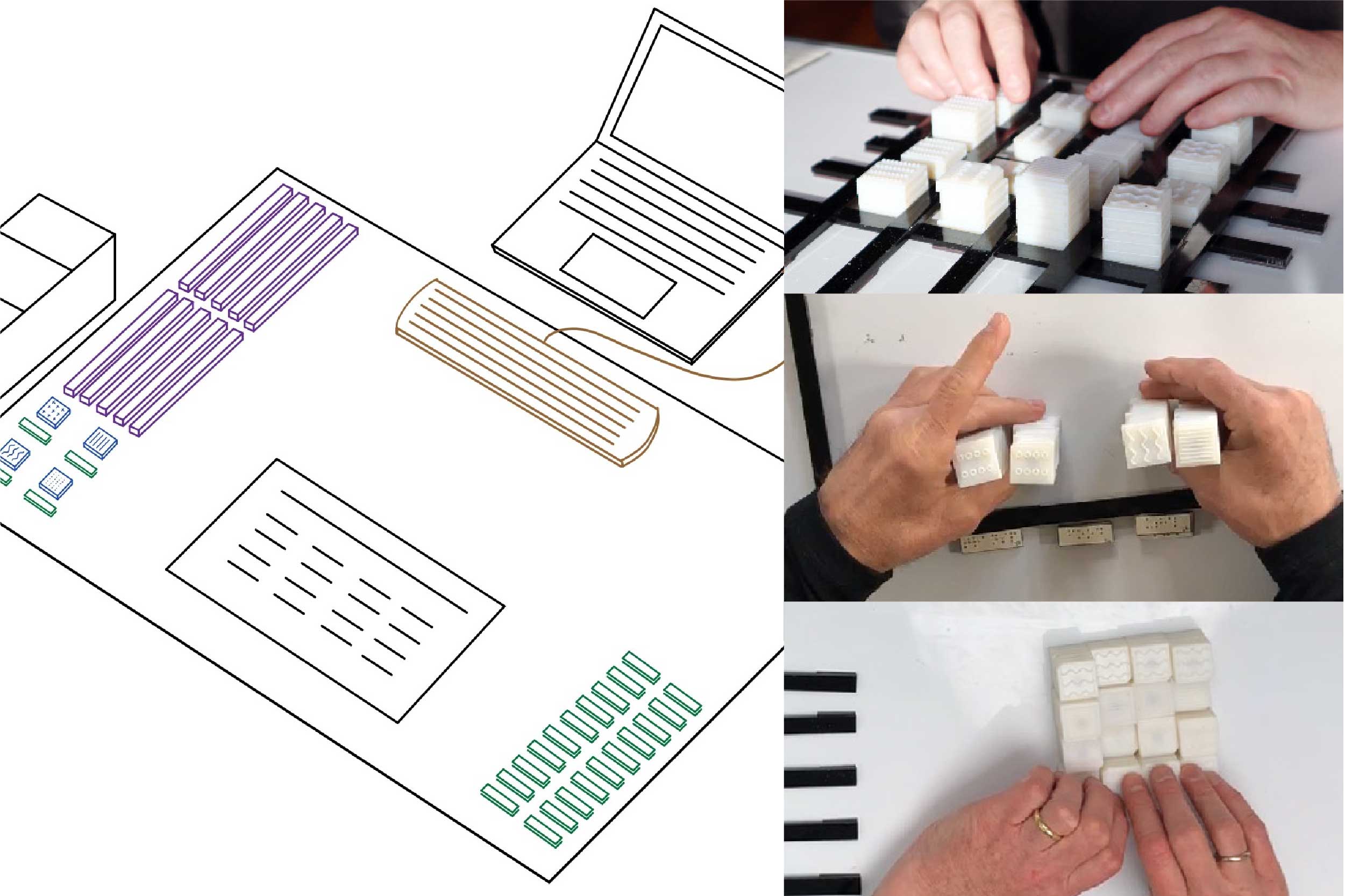

Constructive Visualization to Inform the Design and Exploration of Tactile Data Representations

As data visualization has become increasingly important in our society, many challenges prevent people who are blind and visually impaired (BVI) from fully engaging with data graphics. We adopt a constructive visualization framework, using simple and versatile tokens to engage non-data experts in the construction of tactile data representations.

Haptic PIVOT: On-Demand Handhelds in VR

PIVOT is a wrist-worn haptic device that renders virtual objects into the user’s hand on demand. Its simple design comprises a single actuated joint that pivots a haptic handle into and out of the user’s hand, rendering the haptic sensations of grasping, catching, or throwing an object anywhere in space.

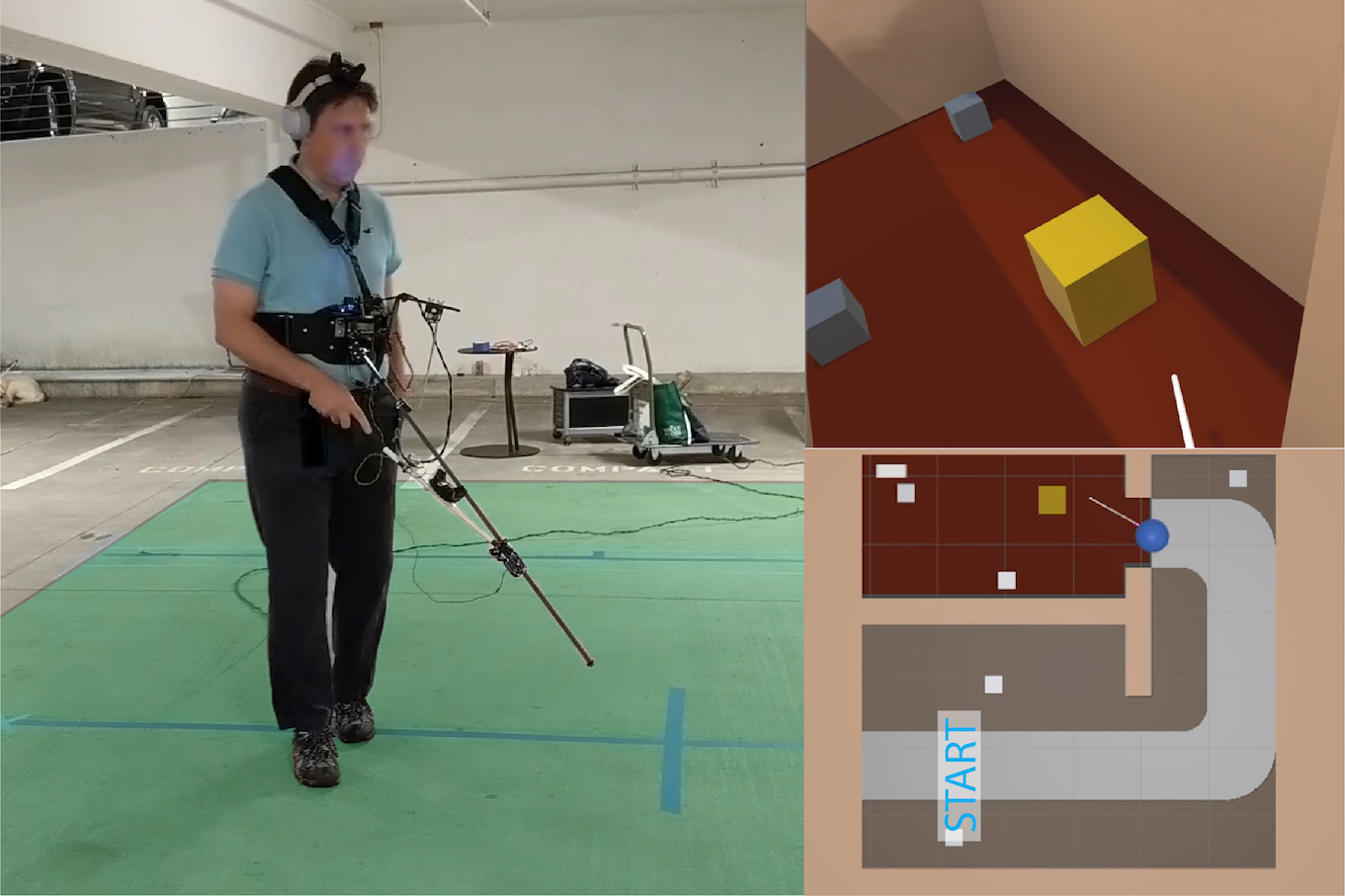

Virtual Reality Without Vision: A Haptic and Auditory White Cane to Navigate Complex Virtual Worlds

How might virtual reality (VR) help a blind person become familiar with an unexplored space? This project explores the design of an immersive haptic and audio VR experience, accessible to people who are blind, with the goal of facilitating orientation and mobility training.

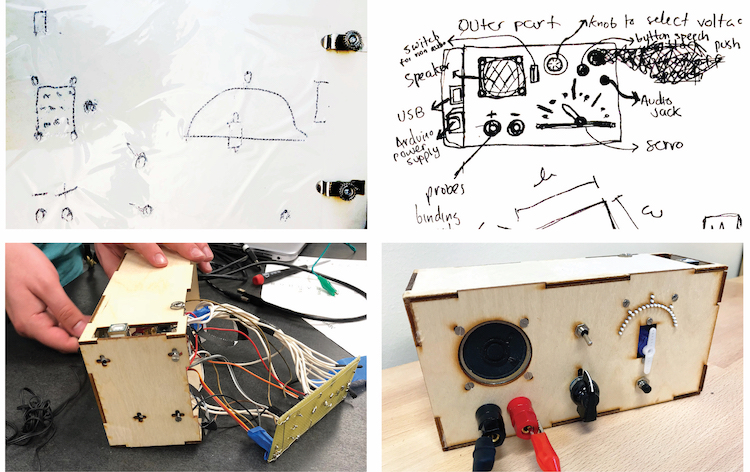

Making Nonvisually: Lessons from the Field

The Maker movement promises access to activities from crafting to digital fabrication for anyone to invent and customize technology. But people with disabilities, who could benefit from Making, still encounter significant barriers to doing so. We share our personal experiences Making nonvisually and supporting its instruction through a series of workshops in which we introduced Arduino to blind hobbyists.

shapeShift: A Mobile Tabletop Shape Display for Tangible and Haptic Interaction

We explore interactions enabled by 2D spatial manipulation and self-actuation of a mobile tabletop shape display (e.g., manipulating spatially aware content or acting as an encountered-type haptic device). We present the design of shapeShift, a novel open-source shape display platform.

Selected Press

October 2020 & April 2018

These Wrist-Worn Hammers Swing Into Your Hands So You Feel Virtual Objects

October 2019

This Tactile Display Lets Visually Impaired Users Feel On Screen 3D Shapes

December 2019

Stanford Researchers Develop Tactile Display to Make 3D Modeling More Accessible for Visually Impaired Users